April 1, 2015

Dehydration for OSB: How to Run Long Transactions and Not Run Out-of-Memory

Offload currently unused in-memory XML documents to persistent storage, as BPEL does. Example code is available for download.

Slow backends can kill the JVM if they are used in a composite service.

Data accumulates in the service while the backend takes its time to respond.

Make the backend slow enough and the data big enough, and the entire heap will be consumed.

Can we do something about it?

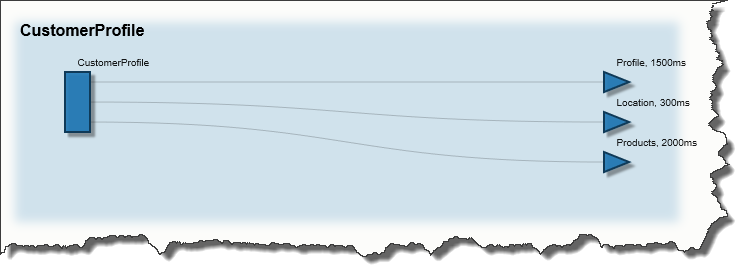

Case Study: CustomerProfile Service

A composite service is one that calls more than one backend service and then combines the results into the response the consumer wants.

Some backend calls complete faster than others. Until the last call completes, the data already received just sit there, unused but occupying precious memory.

This is true even for parallel calls, but it is most harmful for sequential execution.

Take this schematic CustomerProfile service:

A composite service collects data from many backends.

This CustomerProfile service:

- Collects the basic profile information from the Profile backend service and stores it in memory.
- Uses the profile to retrieve location-specific data from the Location service and stores it in memory.
- Based on the profile and location, gathers the available products and services from the Products service.
- Finally, merges all three data sources into one response and returns it to the consumer.

A Single Slow Backend Holds All Data in Memory

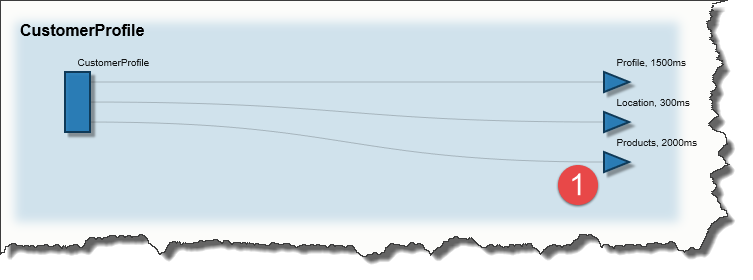

What if the Products service is slow? Say, instead of its usual 2000ms response time, it now takes 10000ms to respond?

A typical CustomerProfile service can wait quite long for all the required data to come in.

Why, we get 5 times more data stored in memory! With the same incoming request rate, each request now stays in progress 5 times longer, so 5 times more requests are in progress at any moment, each holding its Profile and Location data.

You can estimate the memory usage by multiplying the response size by 4. For example, a 100K response takes about 400K in memory after it is parsed.
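The two rules of thumb above can be combined into a rough estimate. Below is a minimal Java sketch, assuming a steady arrival rate: by Little's law, the average number of requests in progress equals the arrival rate multiplied by the response time, and each of those requests holds its parsed data at roughly 4 times the raw size. The load of 10 requests per second and the class name `InFlightMemoryEstimate` are hypothetical, not measurements from this article.

```java
// Hypothetical back-of-the-envelope estimator; the input figures are assumptions.
class InFlightMemoryEstimate {

    // Rule of thumb from the text: parsed XML takes ~4x its raw size in memory.
    static final int PARSE_FACTOR = 4;

    // Little's law: average requests in progress = arrival rate x time in system.
    static double requestsInFlight(double requestsPerSecond, double responseTimeMs) {
        return requestsPerSecond * responseTimeMs / 1000.0;
    }

    // Approximate heap (KB) held by in-flight requests, where heldKb is the raw
    // size of the data each request keeps while waiting (e.g. the Profile response).
    static double heldMemoryKb(double requestsPerSecond, double responseTimeMs, double heldKb) {
        return requestsInFlight(requestsPerSecond, responseTimeMs) * heldKb * PARSE_FACTOR;
    }

    public static void main(String[] args) {
        double rate = 10.0;    // hypothetical load: 10 requests per second
        double heldKb = 100;   // 100K response, as in the example above

        for (double ms : new double[] {2000, 10000}) {
            System.out.printf("%5.0f ms: %4.0f requests in flight, ~%6.0f KB held%n",
                    ms, requestsInFlight(rate, ms), heldMemoryKb(rate, ms, heldKb));
        }
    }
}
```

With these inputs, slowing the call from 2000ms to 10000ms raises the estimate from 20 to 100 requests in flight, and from about 8,000 KB to 40,000 KB of held data: the same 5x growth described above.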

True, this may not be an issue for smaller services.

However, some of the services I deal with return responses of up to 5 MB (!). Letting that much data accumulate in memory can crash OSB with an out-of-memory error.

Higher-than-usual memory use also hurts performance because of memory allocation and garbage collection overhead.

Should We Tighten the Timeouts? No.

An obvious quick-and-dirty solution is to reduce the timeout for the Products service. If it can’t respond in 3000ms, cancel it!

Yes, this prevents the OOM, but some consumers will start getting failures from CustomerProfile. That is a poor trade-off, because we may in fact still have plenty of resources (memory) to serve those requests.

The timeout solution is too blunt.

BPEL Avoids It by Dehydrating

Let’s take a look at our SOA neighbour, BPEL.

BPEL is designed to execute long-running transactions. You may not believe it, but it is totally normal for a BPEL instance to run for days or even weeks!

Read about BPEL correlation IDs if you want to know how BPEL works around read timeouts and network drops.

Just like an OSB request, a BPEL instance must have all the responses accumulated to do its job. With those uber-long transactions, BPEL must be using a LOT of memory, right?

It would, if not for dehydration.

Before performing any request, the BPEL engine takes all the data of the current process, persists them to out-of-process storage (normally a database), and then removes them from memory.

Now, no matter how long the request takes, there is no impact on memory.

When the call is completed, the data are read back from the storage and the process resumes.

Smart, eh?

Emulating Dehydration

We can implement the dehydration in OSB, too!

It will take a manual step, and we will have to decide when and what to dehydrate, but it is totally feasible. All we need to do is:

- Before making a potentially long call, serialize the large data into an on-disk file. We’ll only keep a token (the file name) in memory.

- After the call, de-serialize the data back from the file.

I wrote a small Java helper class for testing that does the work for me.

Please note that the code is simplified and has no real error handling.

```java
package com.genericparallel;

import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.util.UUID;

import org.apache.xmlbeans.XmlObject;

public class Dehydrate {

    // I do not bother with many checks because this is only test code.

    // Serializes the XML into a file named after a random token and returns
    // the token. The file is created relative to the JVM's working directory.
    public static String dehydrate(XmlObject xml) throws Exception {
        if (xml == null) return null;

        String key = UUID.randomUUID().toString();
        File file = new File(key);

        // try-with-resources closes the stream even if writeObject() fails
        try (ObjectOutputStream oos = new ObjectOutputStream(new FileOutputStream(file))) {
            oos.writeObject(xml);
        }
        return key;
    }

    // Reads the XML back from the file named by the token,
    // deletes the file, and returns the XML.
    public static XmlObject hydrate(String key) throws Exception {
        if (key == null) return null;

        File file = new File(key);
        Object xml;
        try (ObjectInputStream ois = new ObjectInputStream(new FileInputStream(file))) {
            xml = ois.readObject();
        }
        file.delete();
        return (XmlObject) xml;
    }
}
```

The dehydrate() method:

- takes XML (say, a Profile response)

- generates a random token

- serializes the XML into a file named after the token

- and returns the token back to the caller.

The hydrate() method does the opposite operation:

- takes a token

- reads the XML back from disk, deletes the file, and returns the XML.
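To try the same technique outside OSB, or without the XMLBeans dependency, here is a hypothetical generic variant. It is a sketch under my own assumptions, not the original helper: the class name `FileDehydrator` is made up, it accepts any `Serializable` object, and it writes files into a dedicated directory instead of the JVM's working directory.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.UUID;

// Hypothetical generic variant of the Dehydrate helper (illustration only).
class FileDehydrator {

    private final Path dir;

    // Creates the storage directory if it does not exist yet.
    FileDehydrator(Path dir) throws IOException {
        this.dir = Files.createDirectories(dir);
    }

    // Writes the object to <dir>/<random UUID> and returns the UUID as the token.
    String dehydrate(Serializable value) throws IOException {
        if (value == null) return null;
        String token = UUID.randomUUID().toString();
        try (ObjectOutputStream out =
                 new ObjectOutputStream(Files.newOutputStream(dir.resolve(token)))) {
            out.writeObject(value);
        }
        return token;
    }

    // Reads the object back, deletes its file, and returns it as the requested type.
    <T extends Serializable> T hydrate(String token, Class<T> type)
            throws IOException, ClassNotFoundException {
        if (token == null) return null;
        Path file = dir.resolve(token);
        Object value;
        try (ObjectInputStream in = new ObjectInputStream(Files.newInputStream(file))) {
            value = in.readObject();
        }
        Files.delete(file);
        return type.cast(value);
    }

    public static void main(String[] args) throws Exception {
        FileDehydrator store = new FileDehydrator(Files.createTempDirectory("dehydrated"));

        String token = store.dehydrate("<profile><name>Jane</name></profile>");
        // ... the slow backend call would happen here; only the token stays in memory ...
        String profile = store.hydrate(token, String.class);
        System.out.println(profile);
    }
}
```

Keeping the files in their own directory makes it easier to find and clean up leftovers, for example files left behind by requests that failed between the two calls.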

For our CustomerProfile project, I’d call the dehydrate() method before invoking the Products service. I’d dehydrate both the Profile and Location responses, and replace their OSB variables with the resulting tokens:

```
$profile = dehydrate($profile)    // here $profile is an XML response
$location = dehydrate($location)
```

After the Products service call completes, I restore the XMLs into memory:

```
$profile = hydrate($profile)    // here $profile is a token
$location = hydrate($location)
```

How long do serialization and de-serialization take? It depends on the storage, but in my tests they took 10ms to 50ms per MB.

You can find an example implementation in the JAR, under the Example/Dehydration project.
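To check that figure on your own storage, you could time a round trip with a small harness like the one below. It is a rough sketch, not a proper benchmark: the class name is hypothetical, there is no statistical analysis, the OS file cache may hide real disk latency, and a 1 MB `byte[]` stands in for the XML, so timings for real XmlObject instances may differ.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Random;

// Hypothetical timing harness for a dehydrate/hydrate round trip.
class DehydrationTiming {

    // Serializes the payload to a temp file, reads it back, deletes the file,
    // and returns the elapsed wall-clock time of write + read in milliseconds.
    static double roundTripMillis(byte[] payload) throws IOException, ClassNotFoundException {
        Path file = Files.createTempFile("dehydrate", ".ser");
        long start = System.nanoTime();
        try (ObjectOutputStream out = new ObjectOutputStream(Files.newOutputStream(file))) {
            out.writeObject(payload);
        }
        byte[] back;
        try (ObjectInputStream in = new ObjectInputStream(Files.newInputStream(file))) {
            back = (byte[]) in.readObject();
        }
        long elapsed = System.nanoTime() - start;
        Files.delete(file);
        if (back.length != payload.length) throw new IllegalStateException("round trip failed");
        return elapsed / 1_000_000.0;
    }

    public static void main(String[] args) throws Exception {
        byte[] oneMb = new byte[1024 * 1024];
        new Random(42).nextBytes(oneMb);
        // The first rounds include JIT warm-up, so look at the later ones.
        for (int i = 0; i < 5; i++) {
            System.out.printf("round %d: %.1f ms%n", i + 1, roundTripMillis(oneMb));
        }
    }
}
```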

Proof That Dehydration Helps Stability & Performance

I built a test project in which an entry proxy called two other services, ServiceA and ServiceB.

ServiceA quickly returned a large 500K response, which the entry proxy kept in memory.

ServiceB’s response was small, but it took about 10s to arrive.

Using JMeter, I ramped up the load on the entry proxy from 1 looping thread to 100 threads, adding one thread per second.

The test server had only a 512 MB heap.

Here are the results:

| Implementation | Result | Average Response Time | Maximum Invocations |
|---|---|---|---|
| Baseline | Failed with OOM | 39s | 210 |
| Dehydration | No failures | 15s | 1200+ |

As you can see, dehydration not only prevented the OOM, but also kept response times much lower!

About Me

My name is Vladimir Dyuzhev, and I'm the author of GenericParallel, an OSB proxy service for making parallel calls effortlessly, and MockMotor, a powerful mock server.

I've been building SOA enterprise systems for clients large and small for almost 20 years. Most of that time I've worked with the BEA (later Oracle) WebLogic platform, including OSB and other SOA systems.

Feel free to contact me if you have a SOA project to design and implement. See my profile on LinkedIn.

I live in Toronto, Ontario, Canada. Email me at info@genericparallel.com